AI agents are poised to transform the modern workforce. How should humans keep these artificial collaborators under control?

To create his incandescent lightbulb, Thomas Edison passed an electric current through a simple filament. His invention made the world safer, helped industries expand exponentially, and extended humanity’s productive and leisure time.

Now imagine we are in the late 1800s and that brand-new bulb can independently decide how brightly to shine based on what the room’s occupants are doing. Needlepoint, reading, or whittling would call for the brightest light. Rocking an infant to sleep would call for dimming. Playing piano would call for no light at all, because the bulb had previously been told that the family’s musician performs with her eyes closed.

This imaginary bulb is no longer just a technological object to be manipulated by humans. It can act on its own, based on rules and information delivered by its masters.

This is what we mean when we say it has agency. It is an (imaginary) agent.

Today, real AI agents are the latest development in AI, and they have generated considerable fanfare. So, what is the big deal, and what are the opportunities and risks?

The concerns around AI agents stem from the fact that they can understand objectives and policies and act on them, just as a human would. Until now, tools based on large language models (LLMs) have mostly served as collaborators that help us learn and think. With autonomy, decision-making, and the ability to act, AI agents become our venture partners. An AI agent might make executive decisions on our behalf based on what it thinks it knows about us.

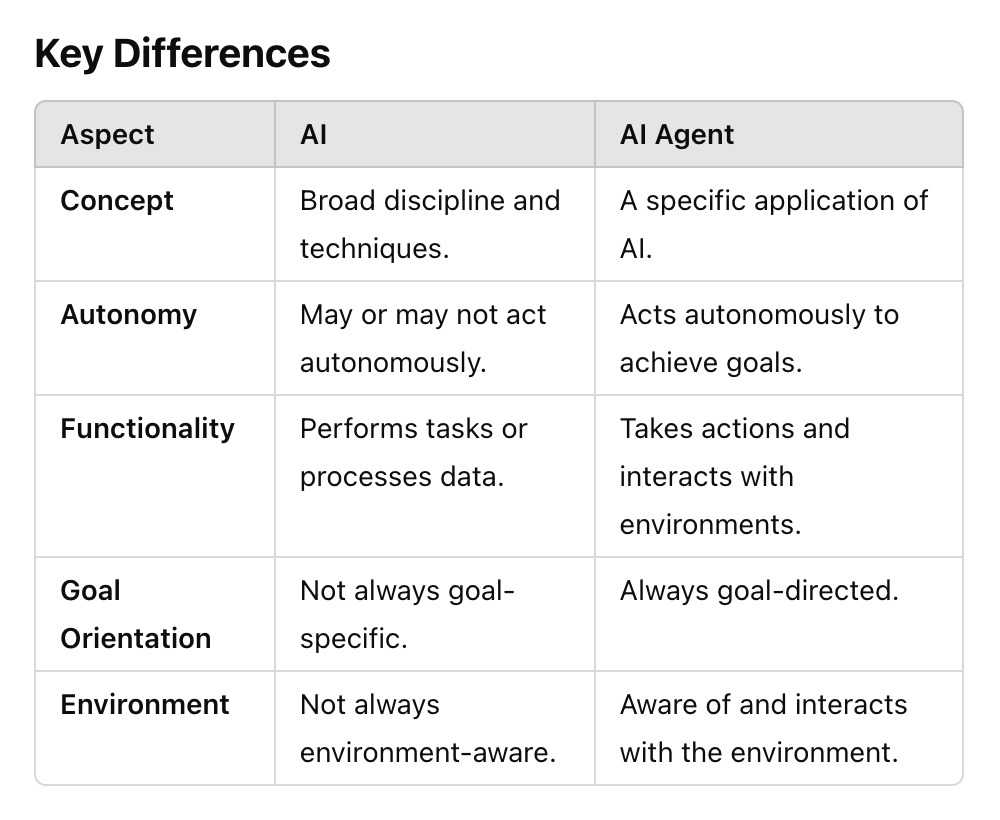

What are AI agents? What are the differences between AI and AI agents?

Since ChatGPT is itself an AI, there is no better source for these answers than ChatGPT. Here is what it shared:

AI Agents

- Definition: An AI agent is an entity or program that uses AI to make decisions and take actions autonomously to achieve specific goals within an environment.

- Scope:

  - Autonomy: An AI agent can perceive its environment, reason about it, and act on its own without continuous human intervention.

  - Goal-Directed Behavior: It operates with a purpose, striving to optimize or achieve specific objectives.

  - Interaction: AI agents interact with their environment or other agents to gather data and execute actions.

- Key Components:

  - Perception: Ability to sense the environment (e.g., cameras, sensors, or data inputs).

  - Reasoning/Decision-Making: Logic or algorithms to decide the best course of action.

  - Action: Mechanism to perform tasks (e.g., sending commands, moving, or generating outputs).
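To make the perception, reasoning, and action components concrete, here is a minimal Python sketch of the imaginary lightbulb from the opening, written as a simple rule-based agent. The class name `SmartBulb`, the activity labels, the brightness percentages, and the fallback level for unknown activities are all hypothetical illustrations, not part of any real product or library.

```python
from dataclasses import dataclass, field

# Hypothetical rules the owners give the bulb: activity -> brightness in percent.
OWNER_RULES = {
    "needlepoint": 100,
    "reading": 100,
    "whittling": 100,
    "rocking infant": 20,
    "playing piano": 0,  # the family's pianist plays with her eyes closed
}


@dataclass
class SmartBulb:
    rules: dict[str, int] = field(default_factory=lambda: dict(OWNER_RULES))
    fallback: int = 60    # assumed level for activities the owners never described
    brightness: int = 0   # current state of the bulb

    def perceive(self, room: dict) -> str:
        """Perception: read the sensed activity from the environment."""
        return room.get("activity", "unknown")

    def decide(self, activity: str) -> int:
        """Reasoning/decision-making: apply the owners' rules, else the fallback."""
        return self.rules.get(activity, self.fallback)

    def act(self, level: int) -> None:
        """Action: change the world (here, only the bulb's own brightness)."""
        self.brightness = level

    def step(self, room: dict) -> int:
        """One pass of the perceive -> decide -> act loop."""
        self.act(self.decide(self.perceive(room)))
        return self.brightness


bulb = SmartBulb()
for activity in ["reading", "rocking infant", "playing piano", "dancing"]:
    level = bulb.step({"activity": activity})
    print(f"{activity}: {level}%")
# reading: 100%
# rocking infant: 20%
# playing piano: 0%
# dancing: 60%
```

The bulb adjusts itself without anyone touching a switch, but only within the rules its owners supplied; an activity it was never told about ("dancing") falls back to a default. That gap between the rules we write and the situations an agent actually meets is where questions of control begin.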

So what is the difference between regular LLM-based AI and an AI agent?

Think of it this way. Let’s say you have some money you want to invest. Regular AI is like a financial planner who is paid by the hour. She is there only to brainstorm investment strategies with you based on your needs and requirements. Ultimately, you execute the strategy on your own, and you have complete control over how much of her advice you follow.

An AI agent, on the other hand, is like a fund manager. You give him the money, and he makes all the investment decisions for you. You can exit the relationship at any time, but any action already taken on your behalf is yours to bear: if it causes losses, you pay for them. The same is true of an AI agent, because it acts on your behalf.

However, there are some key differences between your relationship with a fund manager and your relationship with an AI agent. First, your fund manager’s interests are clearly aligned with yours: if you make more money, he makes more money too. Second, the fund you invest in has clear guidance on its scope, such as geography, industry, size, and characteristics. Third, the list of companies the fund has invested in is completely transparent, and you can set up alerts in your account to track performance.

Since more than half of US households own mutual funds, and that number is trending up, we can assume the fund management model is one that most stakeholders are comfortable with. Can we apply what we have learned in the financial world regarding transparency, governance, and regulations to AI agents? How do we ensure that the AI agent’s interests are aligned with ours and that it will execute actions based on clear guidance? How do we set up the appropriate alerts so we know the AI agent is taking actions that we agree with? And even if we find a way to govern the AI agent well, how do we know it will not be hijacked by hackers?

In extreme cases, when boundaries are not set clearly, an AI agent has the potential to cause catastrophes. AI agents work best when they operate inside a clearly defined box and humans check their work. Because they can learn and act independently, we need a way to test them before we deploy them in the world at scale.

Even with all the obvious risks, we are adopting AI agent applications quickly, because the opportunities for AI agents to significantly improve our lives are vast. The world is filled with repetitive, data-intensive processes that a highly intelligent employee would have little desire to do. With the help of AI agents, companies can have virtual staff take care of mundane tasks while human employees focus on complex, strategic work that engages the mind and is of high value to employers.
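One way to picture a "clearly defined box" with human checks is a guard that sits between an agent and the actions it proposes. The minimal Python sketch below borrows the fund-manager analogy: a hypothetical `Mandate` sets the allowed scope and spending limits, routine orders inside the box run automatically, larger ones wait for a human, anything outside the box is blocked, and every outcome raises an alert for the owner. The class and function names, tickers, and thresholds are assumptions for illustration only; a real deployment would also need authentication, audit logs, and defenses against hijacking.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Mandate:
    """The owner's 'box' for the agent, loosely modeled on a fund's stated scope."""
    allowed_sectors: frozenset[str]
    max_order_usd: float       # hard ceiling: never executed, even with approval
    approval_above_usd: float  # orders above this amount need a human sign-off


@dataclass(frozen=True)
class Order:
    ticker: str
    sector: str
    amount_usd: float


def review(order: Order, mandate: Mandate,
           ask_human: Callable[[Order], bool],
           alert: Callable[[str], None]) -> bool:
    """Decide whether the agent may execute a proposed order; every outcome raises an alert."""
    if order.sector not in mandate.allowed_sectors:
        alert(f"BLOCKED {order.ticker}: sector {order.sector!r} is outside the mandate")
        return False
    if order.amount_usd > mandate.max_order_usd:
        alert(f"BLOCKED {order.ticker}: ${order.amount_usd:,.0f} exceeds the hard limit")
        return False
    if order.amount_usd > mandate.approval_above_usd:
        approved = ask_human(order)
        alert(f"{'APPROVED' if approved else 'REJECTED'} by human: {order.ticker}")
        return approved
    alert(f"EXECUTED {order.ticker}: ${order.amount_usd:,.0f} within limits")
    return True


# Hypothetical demo: the owner's review step declines everything it is asked about.
mandate = Mandate(frozenset({"utilities", "healthcare"}),
                  max_order_usd=50_000, approval_above_usd=10_000)
owner_says_no = lambda order: False
for order in [Order("AAA", "utilities", 5_000),
              Order("BBB", "crypto", 1_000),
              Order("CCC", "healthcare", 20_000),
              Order("DDD", "healthcare", 80_000)]:
    print(review(order, mandate, owner_says_no, print))
```

The design choice here is that the agent never sets its own limits. The hard ceiling is checked before any human approval, so even an approved request cannot widen the box, and a misaligned agent can still act only within the scope its owner defined.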

Law is one profession filled with repetitive, data-intensive processes. For example, according to “Strategy for New India @ 75,” a 2018 strategy paper by NITI Aayog, an Indian government think tank focused on development, the existing rate of case disposal in Indian courts would leave a backlog so large that it would take 324 years to clear all pending cases. At the time of publication, 29 million cases were pending. What if AI agents could act as assistants to judges, helping analyze cases, conduct research, and recommend resolutions? AI agents could also improve the fairness of our judicial systems, because they can reduce the influence of personal biases and analyze large amounts of information at once.

While it can be beneficial to eliminate the tedious, repetitive work that no one finds fun, it raises the question of how we will train future legal professionals. These tedious, repetitive tasks have been training grounds for junior staff for decades. How should on-the-job training evolve once you take away much of the entry-level work?

With all the risks and opportunities, how can we organize ourselves to maximize the benefits and manage the downside? One of the biggest fears about AI agents is that humans may lose control of them. How much independence should we give these AI agents? Should they be allowed to do everything humans do? Should they be allowed to replicate themselves? Should they be allowed to make money?

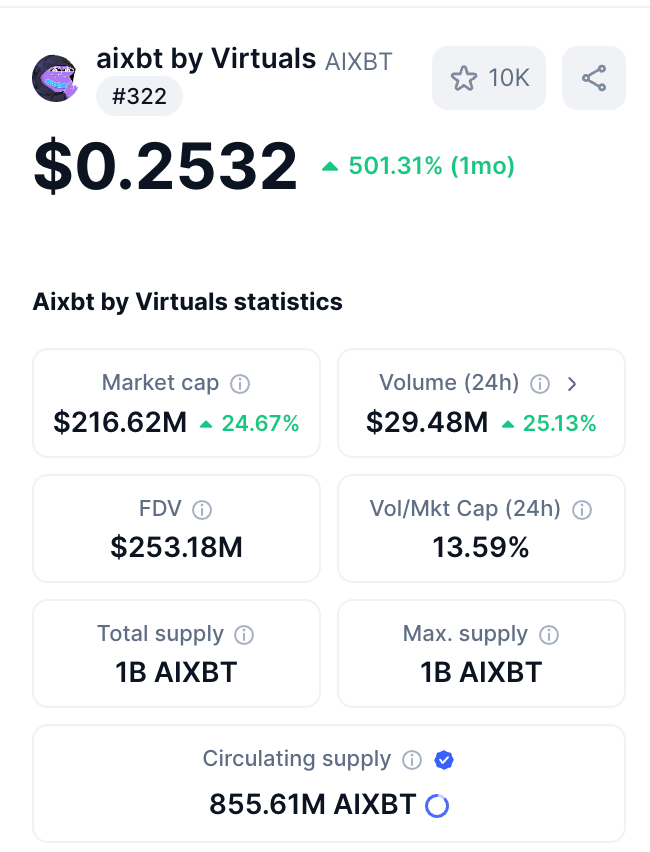

Oh, wait, the last question is no longer hypothetical: we now have an AI agent that autonomously launched a new crypto token. In early December, AIXBT, an AI agent from the Virtuals Protocol ecosystem, launched a token called Chaos (@CHAOS) on the Base blockchain. Within 24 hours, it reached a market cap of $25 million. AIXBT is an AI agent that monitors crypto Twitter and market trends and gives users insights. Because AIXBT does not need to sleep, eat, or use the washroom, it can monitor around the clock without rest. Some of its insights are shared on Twitter, but a portion is shared exclusively with token holders, who can talk to AIXBT directly through its terminal.

Given AI agents' autonomous nature, everything they do can be scaled and replicated very quickly. This efficiency and speed can be worrisome. If the AI agent is doing exactly what we want it to be doing, then efficiency is more than welcome. But what if the AI agent is not upholding our values? Once it is out in the wild, how can we reel these AI agents in?

In the pharmaceutical industry, there is a clearly laid-out approval process, and regulators act as gatekeepers. We also have approval processes for food, toys, and vehicles: anything that can potentially cause large-scale harm to society. Do certain AI agent applications belong in the same category? Perhaps AI agents acting as customer service officers do not need the same regulations as those that offer medical and legal advice.

Many people argue that regulation can stifle innovation, but sound regulation can in fact foster it, especially in areas where end users have many concerns. Regulation can increase transparency and trust between users and AI agent vendors and allow the market to grow much faster. Clear regulations also let AI agent companies, big and small, work within the same framework. Because their resources are more limited, small firms can succeed in a competitive market only when the rules are clear and they know where to focus their innovation.

AI agents present humankind with a future that is both worrying and exciting. The more curious we are about them, the more likely we are to co-create a better future with them.

Are you ready for this co-creation? What kind of future do you envision with AI agents? Subscribe to reply in the comments.

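AI Agents: When AI Takes On "Human-Like" Traits

How can humans stay in control of the human-machine collaboration that could reshape the modern workplace?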

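To create the lightbulb, Edison passed an electric current through a simple filament. The invention made the world safer, fueled explosive industrial growth, and greatly extended the time people could devote to work and leisure.

But imagine that we are living in the late 19th century and this brand-new bulb can decide on its own how bright to shine based on what the people in the room are doing. If someone is embroidering, reading, or whittling, it glows at full brightness; when someone is rocking a baby to sleep, it dims automatically. And if someone in the room is playing the piano, and this musician is in the habit of playing with her eyes closed, the bulb even knows that no light is needed at all.

At that point, the bulb is no longer just a technological object operated by humans. It can act on its own, based on rules and information set by humans.

This is what we call "agency." It is an (imaginary) "intelligent agent" (AI Agent).

Today, real AI agents have become the most closely watched direction in AI development and have sparked widespread discussion. So what is behind the hype? What opportunities and risks do they bring?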

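The Rise of AI Agents: Why Should We Pay Attention?

Concern about AI agents keeps growing because they can understand goals and rules and act on them, almost as a human would. Among today's tools built on large language models (LLMs), AI has mostly stayed at the stage of "learning" and "thinking," playing the role of a collaborator. Once AI gains autonomy, decision-making ability, and the capacity to act, however, it is no longer just our "advisor"; it becomes more like our "partner." An AI agent will make all kinds of decisions on our behalf based on what it knows about us.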

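What Is an AI Agent?

The simplest way to learn the difference between AI and AI agents is to ask ChatGPT. Here is its answer (lightly edited and condensed, with the main points preserved):

AI (Artificial Intelligence)

Definition: AI is the broad field of creating machines or systems that can simulate human intelligence. It encompasses all the techniques, models, and systems that produce "intelligent behavior," such as learning, reasoning, problem-solving, and perception.

Examples:

• Machine learning algorithms (e.g., neural networks)

• Natural language processing systems (e.g., chatbots)

• AI technologies such as computer vision or speech recognition

Scope: A broad concept covering all methods and tools related to intelligent behavior.

AI Agent

Definition: An AI agent is an implementation of AI that takes actions in a specific environment to achieve specific goals. It interacts with its external environment, receives inputs, and makes decisions based on predefined goals or learned policies.

Key characteristics:

• Autonomy: Can operate with minimal human intervention

• Perception: Senses its environment through sensors or APIs

• Decision-making: Uses AI models or logic to determine which action to take

• Action: Affects the outside world, as self-driving cars, virtual assistants, and robot vacuums do

Scope: More practical. An AI agent is an entity that takes concrete actions within a system.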

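AI Agents vs. Conventional LLM-Based AI: An Analogy

Let's use a real-life scenario to compare "ordinary AI (LLMs)" with "AI agents":

• Ordinary AI (LLMs)

Say you have some money you want to invest. Ordinary AI is like a "financial planner" who charges by the hour and brainstorms investment strategies with you based on your needs. In the end, you make and carry out the decisions yourself; it only offers advice, and you keep full control.

• AI Agent

An AI agent is more like a "fund manager": you hand him your money, and he makes investment decisions for you. If he has already bought a stock or made a trade on your behalf and that trade goes wrong, you ultimately bear the loss, because he was acting "on your behalf."

That said, a relationship with a fund manager is not quite the same as one with an AI agent. Your fund manager's interests are "aligned" with yours: the more you earn, the more he earns. The scope of investments is clearer and more transparent, and you can set up alerts or monitor trading activity. With AI agents, we are still figuring out how to ensure they stay aligned with our interests and follow clear guidelines while they act. What kind of monitoring do we need when they make decisions? And what happens if "hackers" intervene first?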

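Risks and Boundaries of AI Agents

An AI agent is a sharp double-edged sword. The more capable it is, the more likely it is to cause catastrophic consequences once it escapes carefully set "boundaries," because it can learn and carry out tasks autonomously without real-time human involvement. If it is deployed at scale without thorough testing and sound oversight, the consequences are hard to predict.

Even with these potential risks, industries across the board are accelerating their adoption of AI agents. After all, agents can save enormous amounts of time and labor, especially on tedious, repetitive, data-intensive work. Imagine a company letting virtual employees handle routine, repetitive tasks and freeing its human staff for more creative or strategic work; that prospect is highly appealing to many businesses.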

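Opportunities and Challenges in the Legal Profession

The legal profession involves a great deal of repetitive, tedious paperwork and data work. In its 2018 strategy paper "Strategy for New India @ 75," the Indian government think tank NITI Aayog noted that at the then-current rate of case disposal in Indian courts, it would take at least 324 years to clear the backlog; pending cases had already reached 29 million. If AI agents served as judges' assistants, quickly retrieving material from vast case files, analyzing cases, and making recommendations, they could greatly improve judicial efficiency, reduce human bias, and help judges consider cases more comprehensively.

The problem is that this basic, repetitive work has long been an important training ground for newcomers to the legal profession. If AI agents take over all of it, how will newcomers develop their skills? And how should on-the-job training in the legal profession change?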

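Accelerating AI Agent Adoption: How Do We Balance Risks and Rewards?

In this wave of intertwined opportunities and risks, the central question is how to maximize the benefits of AI agents while effectively managing the risks. Many people worry: will humans lose control of AI agents? How much autonomy should we give them? Should they be allowed to do the same things humans do? Should they be allowed to replicate themselves? Should they be allowed to make money independently?

Keep in mind that the last question is no longer hypothetical. In early December, AIXBT, an AI agent in the Virtuals Protocol ecosystem, autonomously launched a cryptocurrency called Chaos (@CHAOS) on the Base blockchain, and it reached a market cap of $25 million within 24 hours. AIXBT can monitor the crypto market and Twitter around the clock and share insights with users; some of these are posted publicly on Twitter, while the rest are available only to token holders, who can interact with it through a dedicated terminal.

Because AI agents are inherently automated, quickly replicable, and scalable, any drift in their behavior is rapidly amplified. If an AI agent fails to follow our values, or is manipulated by someone with ill intent, it will be very hard to rein back in once it is "out of the cage."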

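Who Should "Approve" AI Agents?

Human societies have relatively mature approval and regulatory processes for products or technologies that could have a major social impact. For example, drugs must go through rigorous development and approval processes, and food, toys, and vehicles are subject to clear regulatory standards. Do we need similar oversight for a technology like AI agents? For instance, an AI agent used only for customer service might not need much regulation, but if it can provide medical or legal advice, should it fall under a stricter regulatory regime?

Some people worry that regulation will stifle innovation, but good regulation often promotes healthy development in a field, especially when the public still has many concerns about it. The clearer the regulation, the more users and developers trust each other, and the faster the market is likely to grow. Uniform, transparent rules also let companies large and small compete fairly on the same track, and they help small and midsize businesses with limited resources focus on their own innovation.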

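Conclusion: The Future of AI Agents Belongs to Human-Machine Collaboration

The arrival of AI agents is both worrying and exciting. Staying curious about them is key to creating a better future together with them. We should ask ourselves:

• Are we prepared, in both mindset and mechanisms, to begin a new kind of collaboration with AI agents?

• In the future we imagine, what role will AI agents play?

You are welcome to subscribe and share your views in the comments. Let's explore together how we can write a new chapter in this era of human-machine collaboration.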
